Autonomous Search And Rescue Drone

July 2018 - August 2020

Check out my tutorial HERE

Introduction

Between 1992 and 2007, around 11 Search and Rescue (SAR) operations took place each day in U.S. National Parks. Each operation cost about $895 on average, for a total of $58,572,164 over that period. Furthermore, around 700 lives are lost per year in Coast Guard SAR missions. Better training and technological advancements have helped reduce fatalities and costs, but SAR operations are still relatively expensive. Therefore, there is a need for a novel device that would reduce both the fatality rate and the cost of SAR operations.

This device is a drone that can fly autonomously over any terrain and search for missing subjects. The operator just has to plug in the battery and flip a switch, and the drone will take off on a programmed SAR pattern that can be configured for any search area. A camera takes video of the ground below and feeds it into a compute module that runs a Convolutional Neural Network (CNN). This CNN is capable of detecting humans, cars, and several other objects that can provide clues for the SAR team to find the missing subject or subjects. When the drone detects a person, it follows the subject if it moves and simultaneously sends GPS coordinates with time stamps to the SAR team. The operator can also view the live video to plan how to reach the missing subject in the safest manner. When low on battery, the drone autonomously flies back to where it took off. Using a drone instead of helicopters or land teams is a cheaper and safer way to perform SAR operations. Teams could use several of these drones to search a large area quickly and autonomously.

Video

Short demonstration video displaying the drone's tracking capabilities.

Description

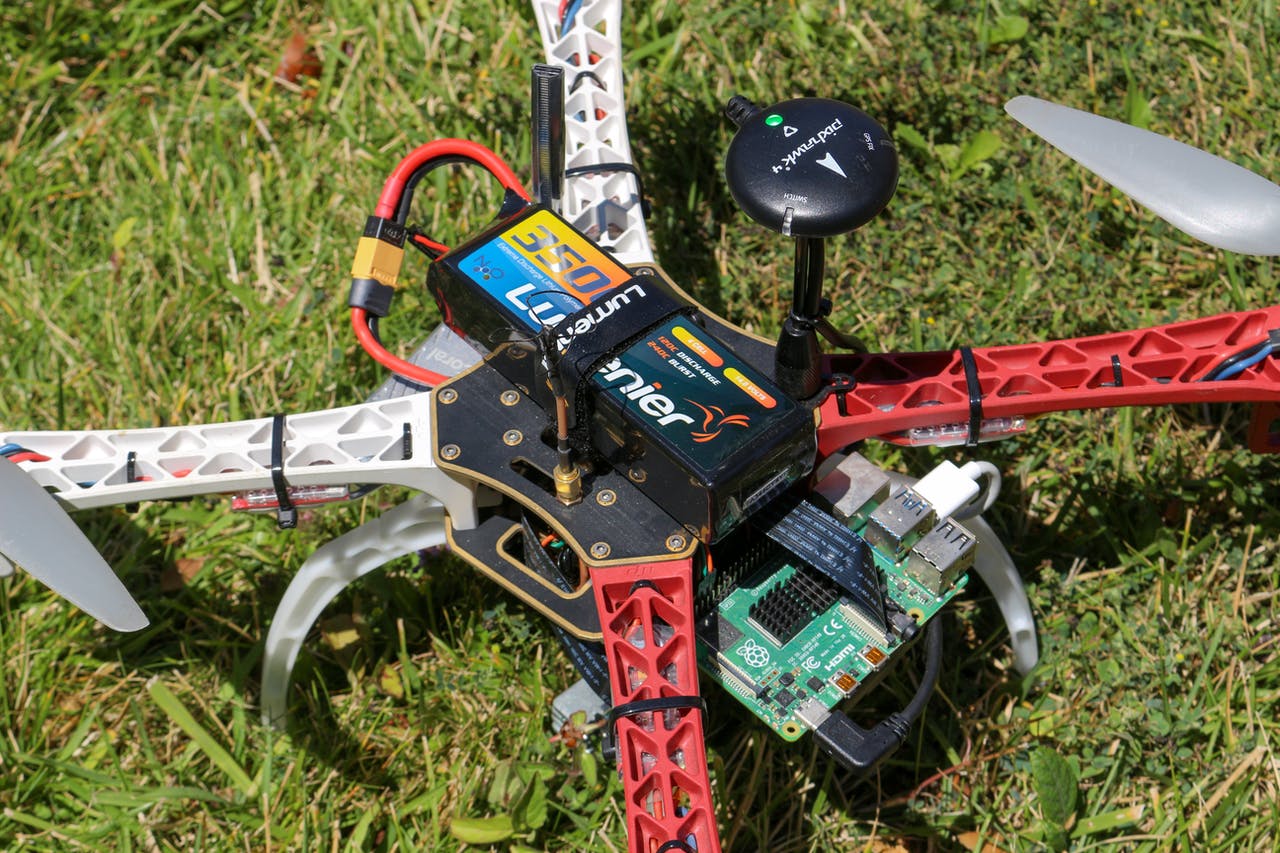

The drone uses a Raspberry Pi 4 Model B 4GB (RPI) to integrate the Pixhawk 4 Flight Controller, Google Coral USB Module, and other peripherals. The RPI is connected to the Coral USB Module via a USB 3.0 port, which allows theoretical transfer speeds of up to 625 MB/s. These speeds are crucial for maximizing the frames per second of the object detection. A 5MP Camera Module is also connected to the RPI through a ribbon cable. The Pixhawk 4 is connected to the RPI via the serial GPIO pins and communicates through the MAVLink protocol. A GPS Module is also connected to the Pixhawk 4 to determine the drone’s location at all times.

The drone is a quadcopter, as it uses 4 motors for vertical lift. Each 2213-13 980 KV motor drives a 10’’ x 4.5’’ propeller and is controlled via a 30A electronic speed controller (ESC). A Power Distribution Board (PDB) is housed in the center of the quadcopter frame and connects all of the electrical components to the 3500 mAh 120C 4 cell LiPo battery that powers them. These components were chosen to give the drone flight times of around 10 minutes and the ability to fly against strong winds.

The RPI camera module is mounted on a 360 degree gimbal, which keeps the camera pointed at the same angle relative to the ground no matter the orientation of the aircraft. This keeps the camera fixed on the subject even if the drone has to go forward, stop, or go back. Ideally, this camera footage would also be fed into a video transmitter and broadcast on a selected channel. Unfortunately, the RPI 4 doesn’t allow a seamless connection from its camera module to a video transmitter, so the device uses a separate camera for live video. The live video camera is mounted on the same gimbal, so its footage closely matches that of the RPI camera module. However, the live video camera uses a wider field of view lens, which lets the operator see a larger area of the landscape than the RPI camera module captures.

The live video camera has 650TVL resolution and is connected to a 5.8 GHz video transmitter that broadcasts the live video on the 5.8 GHz band. The video is received by a 5.8 GHz video receiver set to the same channel as the transmitter and is displayed on a monitor or a pair of goggles. Both the receiver and transmitter support up to 48 channels in the 5.8 GHz band, allowing the operator to select the channel with the least interference. The benefit of the 5.8 GHz band is that it allows the drone to use a very compact antenna for both transmitting and receiving, which minimizes weight and increases durability. The drone uses a cloverleaf type antenna, which provides a more circular (omnidirectional) coverage pattern rather than a directional one. This lets the drone fly in any orientation and keep a strong, uninterrupted signal, with the downside of less range.

The drone uses a CNN model for its computer vision. The model, MobileNet SSD v2, is open source. It is trained on the open source COCO dataset and optimized for fast detection of 90 types of objects (people, cars, etc.) on mobile devices or other devices with limited computing power.

The model requires many fast matrix multiplications in order to recognize objects in the input video. To do this, the drone utilizes the Google Coral USB Module. This module is a small Tensor Processing Unit (TPU) that is specialized for matrix computations. This allows the drone to detect objects at around 24 frames per second, which is sufficient to track fast subjects if necessary. The camera module feeds the video to the RPI, which uses the TPU to detect objects within the video. The RPI then draws a green box around the detected subject along with its label and prediction confidence. Afterwards, the drone proceeds to follow the subject and send GPS coordinates to the operator.
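
As a rough illustration of the step between detection and tracking, the sketch below picks one subject to follow from a list of detections. The `Detection` class, the `pick_target` function, and the 0.5 confidence cutoff are hypothetical names and values chosen for this example; they are not taken from the drone's actual code or from the Coral library.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Detection:
    """One detected object (hypothetical structure for this sketch)."""
    label: str                               # e.g. "person" or "car"
    score: float                             # prediction confidence, 0.0 to 1.0
    box: Tuple[float, float, float, float]   # (xmin, ymin, xmax, ymax) in pixels


def pick_target(detections: List[Detection],
                label: str = "person",
                min_score: float = 0.5) -> Optional[Detection]:
    """Return the most confident detection with the given label, or None."""
    candidates = [d for d in detections
                  if d.label == label and d.score >= min_score]
    if not candidates:
        return None
    return max(candidates, key=lambda d: d.score)
```

Choosing the highest-confidence person is only one possible policy; a real system might instead prefer the detection closest to the previously tracked position.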

Given the position of the green box around the detected object, the RPI calculates the center of that object and compares it to the center of the camera frame. The center of the frame is displayed as a circle on the video feed, along with the green box around the object as explained earlier. If the center of the object is within 10 pixels of the center of the frame in the y axis (between -10 and 10 pixels), the RPI sends a serial signal to the Pixhawk 4 flight controller to stop and hover in place. If the center of the object is more than 10 pixels above the center of the frame, the RPI tells the flight controller to move forward at 1 m/s until the center of the object is back within the -10 to 10 pixel interval. If the center of the object is more than 10 pixels below, the drone travels backwards at 1 m/s accordingly. The number of pixels in the y axis between the center of the frame and the center of the object is displayed near the center frame circle. To reiterate, because a gimbal is used, the center of the frame always stays constant relative to the ground, whether the drone is flying forward, flying backward, or hovering.
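
The forward/backward rule above can be sketched in a few lines of Python. This is a minimal sketch, not the drone's actual code: the function names are made up for illustration, and it assumes the usual image convention where y grows downward from the top of the frame. The 10 pixel deadband and 1 m/s speed come from the description above.

```python
DEADBAND_PX = 10         # hover when within +/-10 pixels (from the text)
FOLLOW_SPEED_MPS = 1.0   # forward/backward speed in m/s (from the text)


def box_center(xmin, ymin, xmax, ymax):
    """Center (x, y) of a bounding box given its corners in pixels."""
    return ((xmin + xmax) / 2.0, (ymin + ymax) / 2.0)


def forward_speed(object_cy, frame_height):
    """Return the commanded speed: positive forward, negative backward, 0 hover."""
    frame_cy = frame_height / 2.0
    # Assumption: image rows grow downward, so an object *above* the
    # frame center has a smaller y value than the center.
    offset = frame_cy - object_cy   # positive when the object is above center
    if offset > DEADBAND_PX:
        return FOLLOW_SPEED_MPS
    if offset < -DEADBAND_PX:
        return -FOLLOW_SPEED_MPS
    return 0.0
```

For example, with a hypothetical 480 pixel tall frame, an object centered at y = 100 is well above the center row, so `forward_speed(100, 480)` commands forward flight.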

The RPI also computes the angle through which the drone has to rotate for the object to be centered in the frame along the x axis. It takes the inverse tangent of the x axis distance between the center of the object and the center of the frame, divided by the y axis distance between the center of the object and the bottom of the frame. If this calculated angle is between -10 and 10 degrees, the RPI sends a serial signal to the flight controller to not rotate. However, if the center of the object is more than 10 degrees to the right of the bottom center of the frame, the RPI tells the flight controller to turn the drone to the right until the angle is back within the -10 to 10 degree interval. If the calculated angle is more than 10 degrees to the left, the drone turns left accordingly. The calculated angle is displayed near the bottom center frame circle.
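
The angle calculation described above could look roughly like the following. This is a sketch under assumptions, not the original code: the function names are hypothetical, pixel coordinates are assumed to start at the top-left corner of the frame, and `math.atan2` is used in place of a plain inverse tangent so that an object sitting on the bottom edge of the frame does not cause a division by zero.

```python
import math

YAW_DEADBAND_DEG = 10.0   # no rotation within +/-10 degrees (from the text)


def yaw_error_deg(object_cx, object_cy, frame_width, frame_height):
    """Angle in degrees from the bottom-center of the frame to the object.

    Positive means the object is to the right, negative to the left.
    """
    dx = object_cx - frame_width / 2.0   # x distance from the frame center
    dy = frame_height - object_cy        # y distance from the bottom edge
    # For dy > 0 this equals atan(dx / dy); atan2 also handles dy == 0.
    return math.degrees(math.atan2(dx, dy))


def yaw_command(angle_deg):
    """Map the angle to a turn decision: 'right', 'left', or 'hold'."""
    if angle_deg > YAW_DEADBAND_DEG:
        return "right"
    if angle_deg < -YAW_DEADBAND_DEG:
        return "left"
    return "hold"
```

With a hypothetical 640 x 480 frame, an object centered at (560, 240) is 240 pixels right of center and 240 pixels above the bottom edge, which gives an angle of 45 degrees and a right turn.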

The forward, backward, and rotation signals are each sent after every frame in the video feed (24 times per second), which allows the drone to track fast moving objects if needed. It’s important to note that the drone's speed is based on the GPS speed and not on the angle at which the drone is tilted forward or backward. This allows the drone to fly against or with the wind and still track the subject well.

Finally, once the battery runs below 40%, the drone makes its way back to its launch position. A LiPo battery is effectively dead when it reaches 20% of its rated capacity, so this threshold leaves about 20% of usable capacity for the return trip. Most likely, the quadcopter can make its way back home with that leftover 20%. However, if it is unable to fly all the way back home due to strong winds, the quadcopter will slowly descend and land. At that point the battery can’t provide enough power to fly, but it still has enough power for the quadcopter to send its GPS coordinates to the operator’s radio transceiver via the 2.4 GHz transceiver on the drone.
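
The battery thresholds above amount to a simple three-way decision, sketched below. The function name and the returned strings are hypothetical and only illustrate the logic; on the real drone this kind of failsafe may well be handled by the flight controller itself rather than by code like this.

```python
RETURN_THRESHOLD = 0.40   # start flying home below 40% (from the text)
EMPTY_THRESHOLD = 0.20    # a LiPo at 20% is treated as dead (from the text)


def battery_action(remaining_fraction):
    """Decide what to do for a remaining charge between 0.0 and 1.0."""
    if remaining_fraction <= EMPTY_THRESHOLD:
        # Not enough power left to keep flying: descend and land,
        # while still reporting GPS coordinates.
        return "land"
    if remaining_fraction < RETURN_THRESHOLD:
        return "return_home"
    return "continue"
```

The gap between the two thresholds is the roughly 20% of capacity that is available for the trip home.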

Putting this all together, the operator simply has to power on the drone and flip the safety switch to allow the drone to take off. The drone then launches and begins its SAR pattern. By default, the drone performs a standard square pattern over a 60,000 square foot area. This can be configured to a different pattern and a different area. The operator can also view the live footage via a video receiver and monitor. If the subject is not found, the drone returns home and the operator can choose to launch it elsewhere with a fresh battery. If the subject is found, the drone tracks the subject and provides GPS coordinates of the location. The live video provides an aerial view of the terrain to help plan the safest route. If the terrain is more complicated, the operator can call the drone back home by flipping a switch on the radio transmitter. The video of the drone’s trip is recorded on the RPI’s SD card and can be uploaded into 3D mapping software to map out the full terrain.
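
To give a feel for what a square pattern over a 60,000 square foot area looks like, here is a minimal waypoint sketch. It assumes an expanding square search centered on the launch point, which is a common SAR pattern but not necessarily the one this drone flies. The function name and the `spacing_m` track spacing are hypothetical, and the waypoints are plain north/east offsets in meters rather than GPS coordinates.

```python
import math

SQ_FT_TO_SQ_M = 0.09290304   # exact conversion: 1 ft = 0.3048 m


def expanding_square(area_sq_ft, spacing_m):
    """Return (north, east) offsets in meters from the launch point.

    Legs grow as 1, 1, 2, 2, 3, 3, ... times the spacing, turning 90
    degrees after each leg, until the next waypoint would leave the area.
    """
    side_m = math.sqrt(area_sq_ft * SQ_FT_TO_SQ_M)
    half = side_m / 2.0
    headings = [(1, 0), (0, 1), (-1, 0), (0, -1)]   # north, east, south, west
    north, east = 0.0, 0.0
    waypoints = [(north, east)]
    leg = 0
    while True:
        length = spacing_m * (leg // 2 + 1)
        d_north, d_east = headings[leg % 4]
        north += d_north * length
        east += d_east * length
        if abs(north) > half or abs(east) > half:
            break   # the next waypoint would fall outside the search square
        waypoints.append((north, east))
        leg += 1
    return waypoints


waypoints = expanding_square(60_000, spacing_m=10.0)
```

A 60,000 square foot square is roughly 75 m on a side, so with a 10 m spacing the pattern stays within about 37 m of the launch point in each direction. A smaller spacing gives denser coverage at the cost of a longer flight.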

Ideally, a SAR operation would use several of these drones to search a larger area. In the end, this drone gives SAR teams technology that can search for a missing subject faster, more cheaply, and more safely than traditional strategies.