Recycle Sorting Robotic Arm With Computer Vision

June 2019 - July 2019

Story

Did you know that the average contamination rate in communities and businesses ranges up to 25%? That means up to one out of every four pieces of recycling you throw away doesn’t get recycled. Much of this is caused by human error in recycling centers. Traditionally, workers sort trash into different bins by material. Humans inevitably make mistakes and fail to sort the trash properly, which leads to contamination. As pollution and climate change become increasingly significant in today’s society, recycling plays a huge part in protecting our planet. Using robots to sort trash could drastically reduce contamination rates while also being cheaper and more sustainable. To tackle this, I created a recycle sorting robot that uses machine learning to distinguish between different recycling materials.

Demo Video

Code

Please clone my GitHub repository to follow along with this tutorial.

Components List

3D Parts

| Qty | Part |
|---|---|
| x2 | Gripper |
| x1 | Gripper Base |
| x4 | Gripper Link |
| x1 | Gear 1 |
| x1 | Gear 2 |
| x1 | Arm 1 |
| x1 | Arm 2 |
| x1 | Arm 3 |
| x1 | Waist |
| x1 | Base |

Getting the Data

To train an object detection model that can recognize different recycling materials, I used the trashnet dataset, which includes 2,527 images:

- 501 glass

- 594 paper

- 403 cardboard

- 482 plastic

- 410 metal

- 137 trash

Here is an example image:

This dataset is quite small for training an object detection model. The trash class in particular has only 137 images, which is too few to train an accurate model, so I decided to leave it out.

Make sure to download the dataset-resized.zip file. It contains images that have already been downsized to allow for faster training. If you would like to resize the raw images yourself, download the dataset-original.zip file instead.
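To double-check the download, here is a small standard-library sketch that counts images per class. It assumes the zip extracts to a `dataset-resized/` folder with one subfolder per class (`glass/`, `paper/`, and so on); adjust the path and folder names if yours differ. It also drops the trash class, as described above. `count_images` and `usable_classes` are illustrative helpers, not part of the repository.

```python
from pathlib import Path
from typing import Dict

# Assumed layout: <root>/<class name>/<image file>, e.g. dataset-resized/glass/glass1.jpg
IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png"}
EXCLUDED_CLASSES = {"trash"}  # too few images to train on, so it is left out


def count_images(root: str) -> Dict[str, int]:
    """Map each class subfolder of root to the number of image files it contains."""
    counts: Dict[str, int] = {}
    for class_dir in sorted(Path(root).iterdir()):
        if not class_dir.is_dir():
            continue  # skip stray files at the top level, such as a README
        counts[class_dir.name] = sum(
            1 for p in class_dir.iterdir() if p.suffix.lower() in IMAGE_SUFFIXES
        )
    return counts


def usable_classes(counts: Dict[str, int]) -> Dict[str, int]:
    """Drop the classes that are excluded from training."""
    return {name: n for name, n in counts.items() if name not in EXCLUDED_CLASSES}
```

If the assumed layout is right, `count_images("dataset-resized")` should reproduce the per-class numbers listed above, and `usable_classes(...)` leaves the five classes used for training.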

Labeling the Images

Next, we need to label the images of the different recycling materials so we can train the object detection model. To do this, I used labelImg, a free tool for drawing object bounding boxes on images.

Label each image with the proper class. This tutorial shows you how. Draw each bounding box as tightly around its object as possible so that the detection model is as accurate as possible. Save all the .xml files into one folder.

Here is how to label your images:

This is a very tedious, mind-numbing process. Thankfully, I have already labeled all the images for you! You can find the labels here.
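labelImg saves each annotation in Pascal VOC XML format by default. Below is a minimal sketch for sanity-checking those files with Python's built-in `xml.etree.ElementTree`. It assumes the standard VOC tags labelImg writes (`size/width`, `size/height`, `object/name`, `object/bndbox/xmin`, and so on); `Box` and `read_voc_annotation` are illustrative names, not part of labelImg.

```python
import xml.etree.ElementTree as ET
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Box:
    label: str
    xmin: int
    ymin: int
    xmax: int
    ymax: int


def read_voc_annotation(xml_text: str) -> Tuple[int, int, List[Box]]:
    """Parse one Pascal VOC annotation into (image width, image height, boxes)."""
    root = ET.fromstring(xml_text)
    width = int(root.findtext("size/width"))
    height = int(root.findtext("size/height"))
    boxes = []
    for obj in root.iter("object"):
        bb = obj.find("bndbox")
        # Coordinates are pixels; float() first in case a tool wrote "10.0"
        coords = [int(float(bb.findtext(tag))) for tag in ("xmin", "ymin", "xmax", "ymax")]
        boxes.append(Box(obj.findtext("name"), *coords))
    return width, height, boxes
```

To check a file on disk, pass `Path(path).read_text()`; a box whose coordinates fall outside `width` and `height`, or whose label is misspelled, is worth fixing before training.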

Training

For training, I decided to use transfer learning with TensorFlow. This lets us train a decently accurate model without a large amount of data.

There are a couple of ways to do this: we can train on our local desktop machine or in the cloud. Training on a local machine can take a very long time, depending on how powerful your computer is and whether you have a powerful GPU. In my opinion it is the easiest option, but again with the downside of speed.

There are some key things to note about transfer learning. You need to make sure that the pre-trained model you use for training is compatible with the Coral Edge TPU. You can find compatible models here. I used the MobileNet SSD v2 (COCO) model. Feel free to experiment with others too.
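Whichever tutorial you follow, the TensorFlow Object Detection API needs a label map listing your classes, with ids starting at 1 (id 0 is reserved for the background). A minimal sketch that writes one for the five classes used here; the class order is an assumption and must match whatever order you use when generating your training records. `label_map_pbtxt` is an illustrative helper, not the repository's code.

```python
from typing import List

# Assumed class order; "trash" is left out as described above
CLASSES = ["glass", "paper", "cardboard", "plastic", "metal"]


def label_map_pbtxt(classes: List[str]) -> str:
    """Build label_map.pbtxt text; ids start at 1 because 0 means background."""
    items = []
    for i, name in enumerate(classes, start=1):
        items.append(f"item {{\n  id: {i}\n  name: '{name}'\n}}")
    return "\n\n".join(items) + "\n"
```

Write the result to `label_map.pbtxt`, and remember to set `num_classes: 5` in the pre-trained model's `pipeline.config` so it matches.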

To train on your local machine, I would recommend following Google's tutorial, or EdjeElectronics' tutorial if you are running Windows 10. Personally, I have tested EdjeElectronics' tutorial and trained successfully on my desktop. I cannot confirm that Google's tutorial will work, but I would be surprised if it doesn't.

To train in the cloud, you can use AWS or GCP. I found this tutorial that you can try. It uses Google's Cloud TPUs, which can train your object detection model super fast. Feel free to use AWS as well.

Whether you train on your local machine or in the cloud, you should end up with a trained TensorFlow model.

Compiling the Trained Model

In order for your trained model to work with the Coral Edge TPU, you need to compile it.

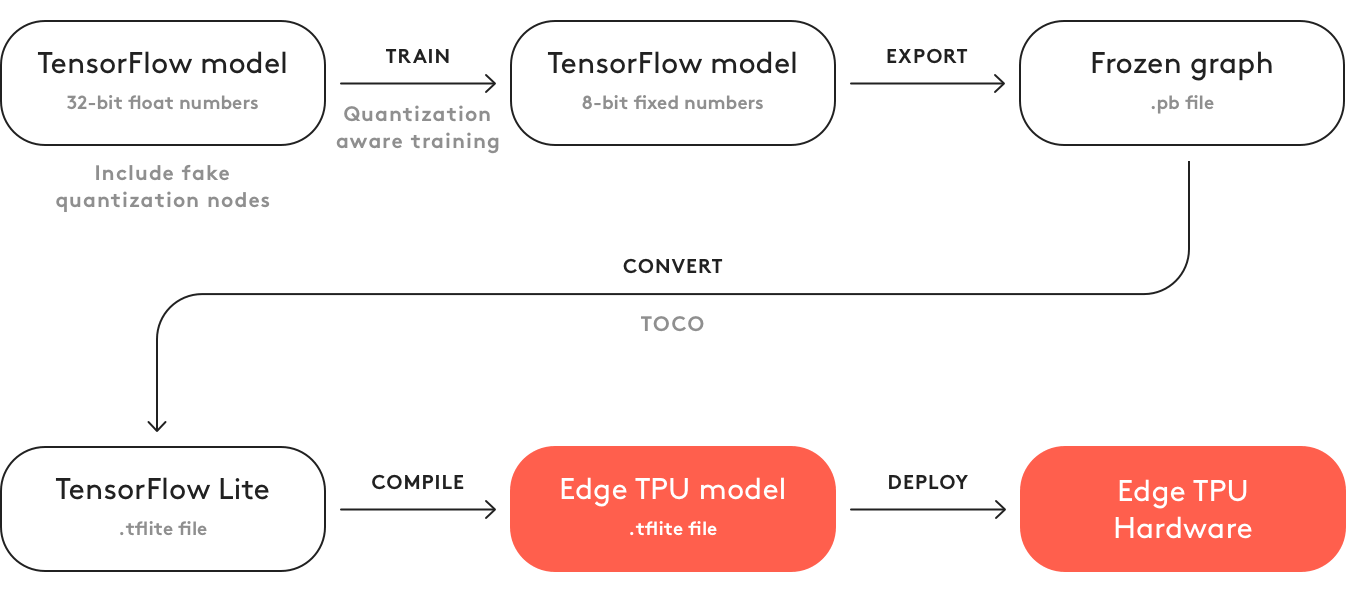

Here is a diagram for the workflow:

After training, you need to export the model as a frozen graph (.pb file). Then, you need to convert it into a TensorFlow Lite model. Note the "Post-training quantization" step in the workflow: if you used one of the compatible pre-trained models for transfer learning, you don't need to do this. Take a look at the full documentation on compatibility here.
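For the TensorFlow Lite step, here is a minimal sketch, assuming you trained with the TensorFlow 1.x Object Detection API and have already run its `export_tflite_ssd_graph.py` script (with `--add_postprocessing_op=true`) to produce `tflite_graph.pb`. The tensor names are the ones that script emits for SSD models, and the call is TensorFlow's `tf.compat.v1.lite.TFLiteConverter.from_frozen_graph` (available in TF 1.14+ and 2.x). File names are placeholders, and this is a sketch rather than the exact procedure from the tutorials above.

```python
# Tensor names produced by export_tflite_ssd_graph.py for SSD models
INPUT_ARRAY = "normalized_input_image_tensor"
OUTPUT_ARRAYS = [
    "TFLite_Detection_PostProcess",    # boxes
    "TFLite_Detection_PostProcess:1",  # classes
    "TFLite_Detection_PostProcess:2",  # scores
    "TFLite_Detection_PostProcess:3",  # number of detections
]
INPUT_SHAPE = [1, 300, 300, 3]  # matches the 300x300 model in the repo's folder name
# uint8 input stats: real_value = (quantized_value - mean) / std_dev, i.e. roughly [-1, 1)
QUANT_STATS = {INPUT_ARRAY: (128, 128)}


def convert_to_tflite(frozen_graph_path: str, tflite_path: str) -> None:
    """Convert a quantization-aware SSD frozen graph into a fully quantized .tflite file."""
    import tensorflow as tf  # imported lazily so the settings above load without TensorFlow

    converter = tf.compat.v1.lite.TFLiteConverter.from_frozen_graph(
        frozen_graph_path,
        input_arrays=[INPUT_ARRAY],
        output_arrays=OUTPUT_ARRAYS,
        input_shapes={INPUT_ARRAY: INPUT_SHAPE},
    )
    converter.inference_type = tf.uint8  # fully quantized, as the Edge TPU requires
    converter.quantized_input_stats = QUANT_STATS
    converter.allow_custom_ops = True  # TFLite_Detection_PostProcess is a custom op
    with open(tflite_path, "wb") as f:
        f.write(converter.convert())
```

For example, `convert_to_tflite("tflite_graph.pb", "detect.tflite")`. If conversion fails, check which TensorFlow version your training tutorial used, since converter behavior changed across releases.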

With the TensorFlow Lite model in hand, you need to compile it into an Edge TPU model. See details on how to do this here.
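The compilation itself is done with Coral's `edgetpu_compiler` command-line tool, which writes its output as `<input name>_edgetpu.tflite` (which is why the repository's model is called `detect_edgetpu.tflite`). A small sketch that wraps the CLI from Python, assuming the compiler is installed and on your `PATH` (check Coral's documentation for supported host platforms); the helper names are illustrative.

```python
import subprocess
from pathlib import Path


def compiled_model_path(tflite_path: str, out_dir: str) -> Path:
    """edgetpu_compiler names its output <input stem>_edgetpu.tflite inside out_dir."""
    return Path(out_dir) / (Path(tflite_path).stem + "_edgetpu.tflite")


def compile_for_edgetpu(tflite_path: str, out_dir: str = ".") -> Path:
    """Run edgetpu_compiler on a quantized .tflite model and return the output path."""
    # -o / --out_dir selects the output directory; check=True raises if compilation fails
    subprocess.run(["edgetpu_compiler", "-o", str(out_dir), str(tflite_path)], check=True)
    return compiled_model_path(tflite_path, out_dir)
```

The compiler also prints (and logs) how many operations were mapped to the Edge TPU; if most ops stay on the CPU, the model was probably not fully quantized.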

Deploy the Model

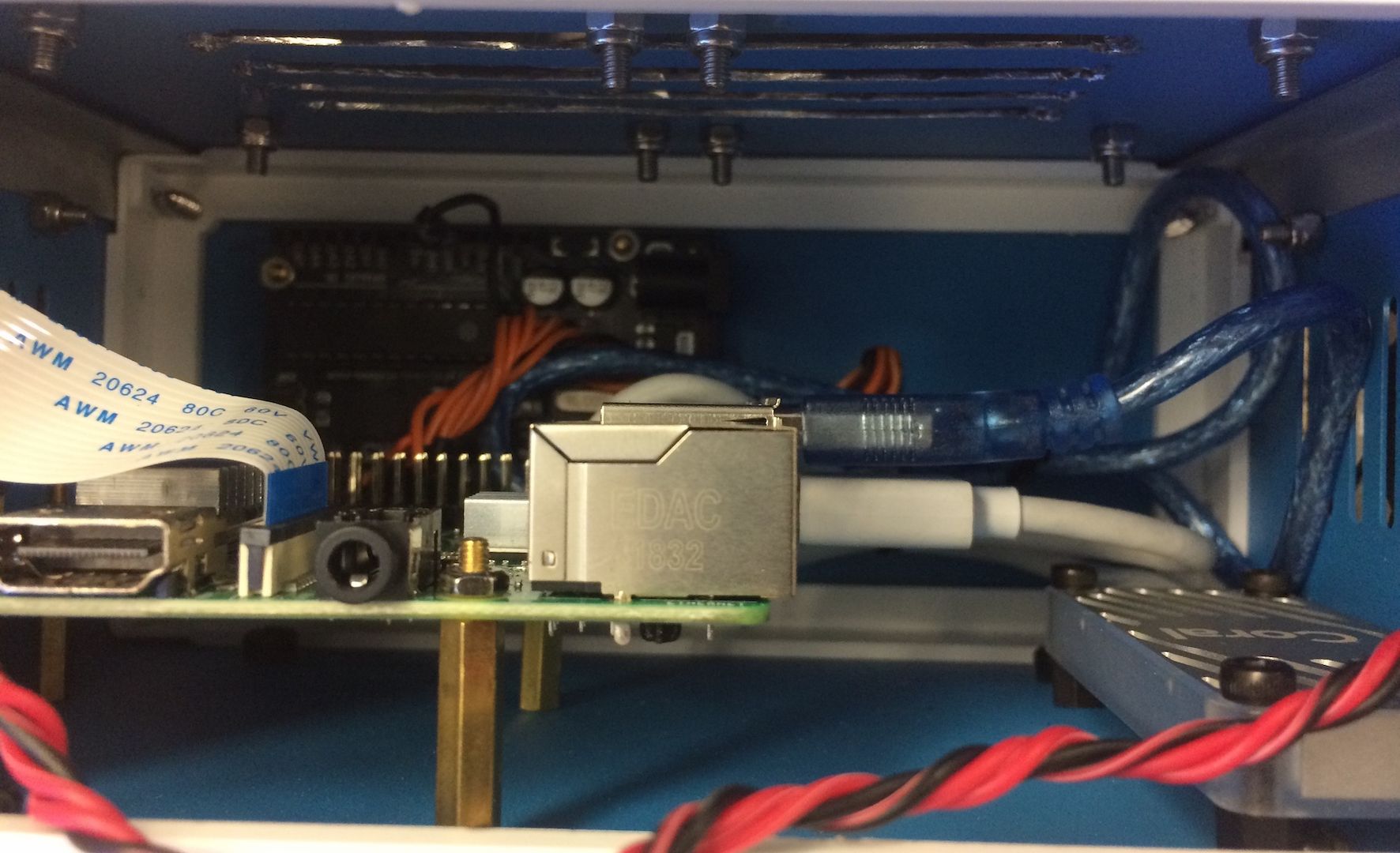

The next step is to set up the Raspberry Pi (RPI) and Edge TPU to run the trained object detection model.

First, set up the RPI using this tutorial.

Next, set up the Edge TPU following this tutorial.

Finally, connect the RPI camera module to the Raspberry Pi.

You are now ready to test your object detection model!

If you cloned my repository already, you will want to navigate to the RPI directory and run the test_detection.py file:

```shell
python test_detection.py --model recycle_ssd_mobilenet_v2_quantized_300x300_coco_2019_01_03/detect_edgetpu.tflite --labels recycle_ssd_mobilenet_v2_quantized_300x300_coco_2019_01_03/labels.txt
```
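The `--labels` file maps the model's numeric class ids to names. As an illustration only (not the repository's code), here is a sketch that parses a Coral-style labels file, assumed to contain one `<id> <label>` pair per line (check the repo's `labels.txt`), and keeps the most confident detection above a threshold. The `(label_id, score, box)` tuples are a hypothetical stand-in for whatever the detection engine actually returns.

```python
from typing import Dict, List, Optional, Tuple

# Hypothetical detection record: (label_id, score, (xmin, ymin, xmax, ymax))
Detection = Tuple[int, float, Tuple[float, float, float, float]]


def read_label_file(text: str) -> Dict[int, str]:
    """Parse '<id> <label>' lines; a line without a numeric id uses its line index."""
    labels: Dict[int, str] = {}
    for index, line in enumerate(text.splitlines()):
        line = line.strip()
        if not line:
            continue  # ignore blank lines
        parts = line.split(maxsplit=1)
        if len(parts) == 2 and parts[0].isdigit():
            labels[int(parts[0])] = parts[1].strip()
        else:
            labels[index] = line
    return labels


def best_detection(detections: List[Detection], threshold: float = 0.5) -> Optional[Detection]:
    """Return the highest-scoring detection at or above threshold, or None."""
    confident = [d for d in detections if d[1] >= threshold]
    return max(confident, key=lambda d: d[1], default=None)
```

With `labels = read_label_file(open("labels.txt").read())`, the class name of the winning detection is `labels[best[0]]`. A higher threshold means fewer false grabs but more missed items.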

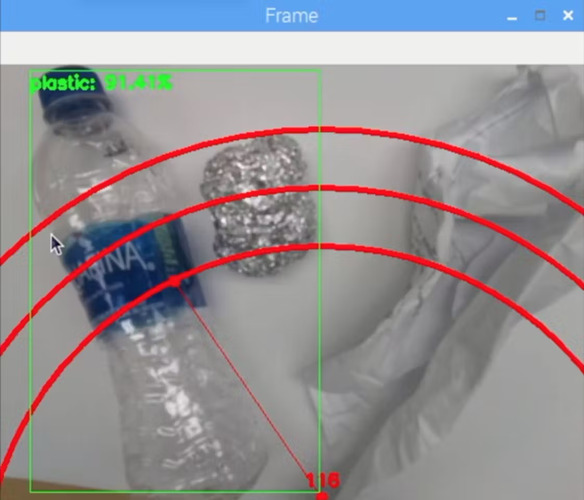

A small window should pop up and if you put a plastic water bottle or other recycle material, it should detect it like this:

Press the letter "q" on your keyboard to end the program.

Build the Robotic Arm

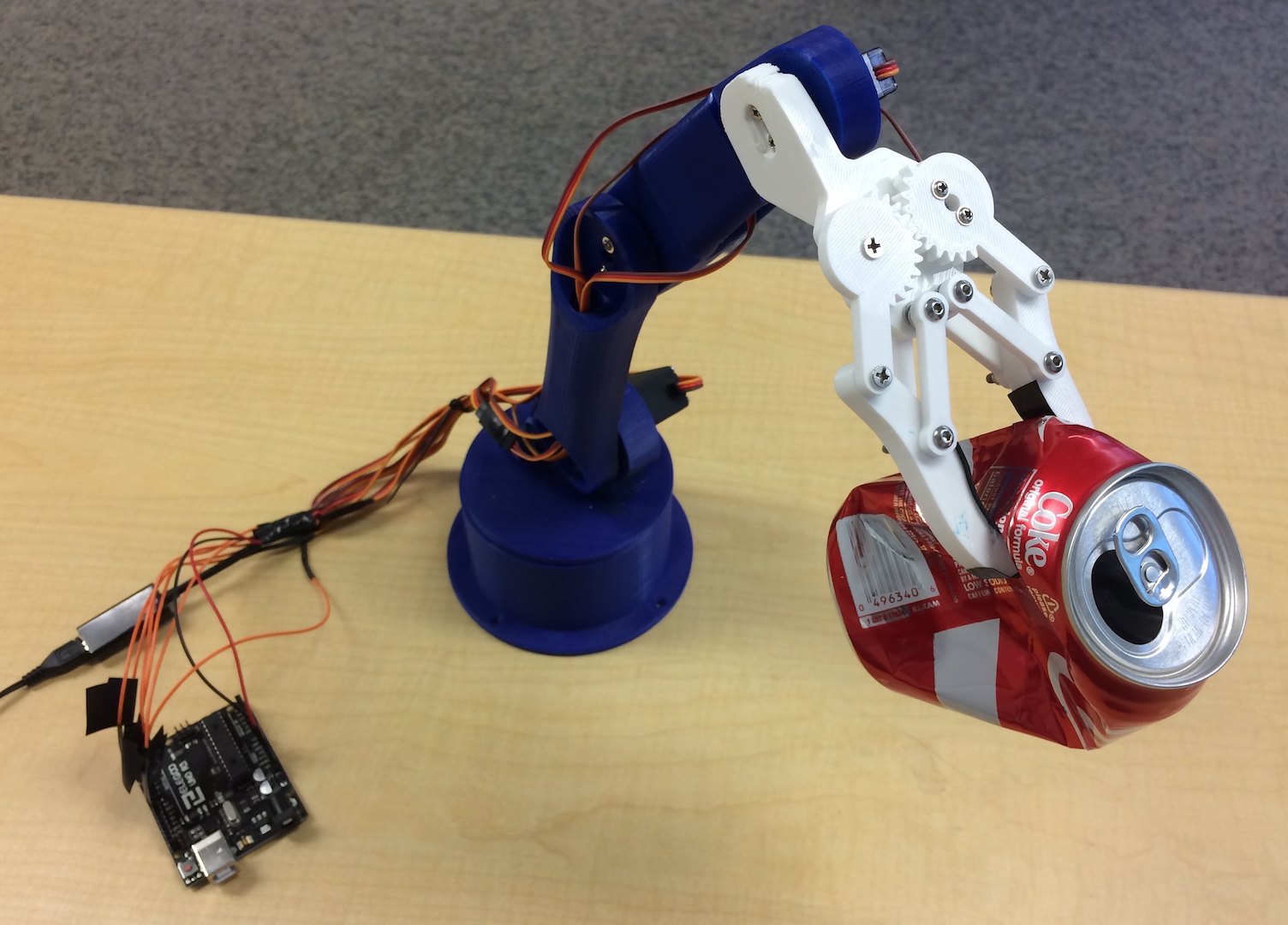

The robotic arm is a 3D printed arm I found here. Just follow the tutorial on setting it up.

This is how my arm turned out:

Make sure you connect the servos to the corresponding Arduino I/O pins used in my code. Connect the servos from the bottom to the top of the arm in this order: 3, 11, 10, 9, 6, 5. Connecting them in any other order will cause the code to move the wrong servo!

Test that it works by navigating to the Arduino directory and uploading the basicMovement.ino sketch to the Arduino. It will simply grab an object that you place in front of the arm and drop it behind.

Connecting the RPI and Robotic arm

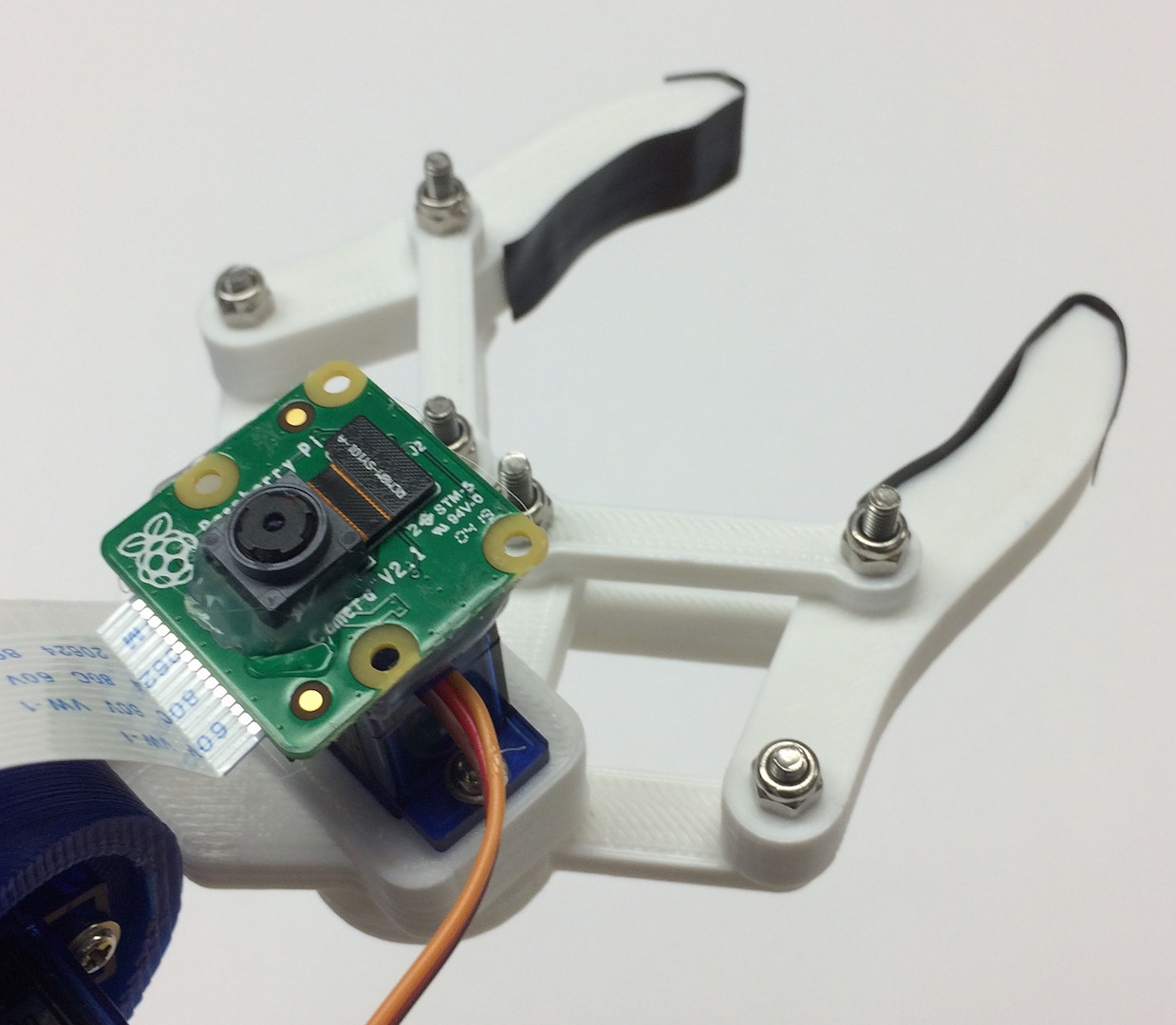

We first need to mount the camera module to the bottom of the claw:

Try to align the camera as straight as possible to minimize errors when grabbing the recognized recycling material. You will need the long camera module ribbon cable listed in the materials list.

Next, you need to upload the roboticArm.ino file to the Arduino board.

Finally, we just have to connect a USB cable between one of the RPI's USB ports and the Arduino's USB port. This allows the two boards to communicate over serial. Follow this tutorial to set it up.
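On the Pi side, serial communication is typically done with the pySerial library. A minimal sketch, assuming a hypothetical protocol in which the Pi sends the detected class name followed by a newline; the actual message format, port name and baud rate must match what roboticArm.ino expects, so treat every constant here as an assumption.

```python
import time

PORT = "/dev/ttyACM0"  # assumption: a common device name for an Arduino Uno on the Pi
BAUD_RATE = 9600       # assumption: must match Serial.begin(...) in the Arduino sketch


def encode_command(label: str) -> bytes:
    """Hypothetical protocol: the class name in lowercase, terminated by a newline."""
    return (label.strip().lower() + "\n").encode("ascii")


def open_arduino(port: str = PORT, baud: int = BAUD_RATE):
    """Open the serial port once and wait for the Arduino to finish rebooting."""
    import serial  # pySerial, imported lazily

    ser = serial.Serial(port, baud, timeout=1)
    time.sleep(2)  # opening the port resets most Arduino boards; give the bootloader time
    return ser


def send_label(ser, label: str) -> None:
    """Send one detected label to the arm and wait until it has been written."""
    ser.write(encode_command(label))
    ser.flush()
```

Opening the port once and reusing it (rather than reopening it for every detection) avoids resetting the Arduino mid-sort; on the Arduino side, `Serial.readStringUntil('\n')` is one way to read such a line.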

Final Touches

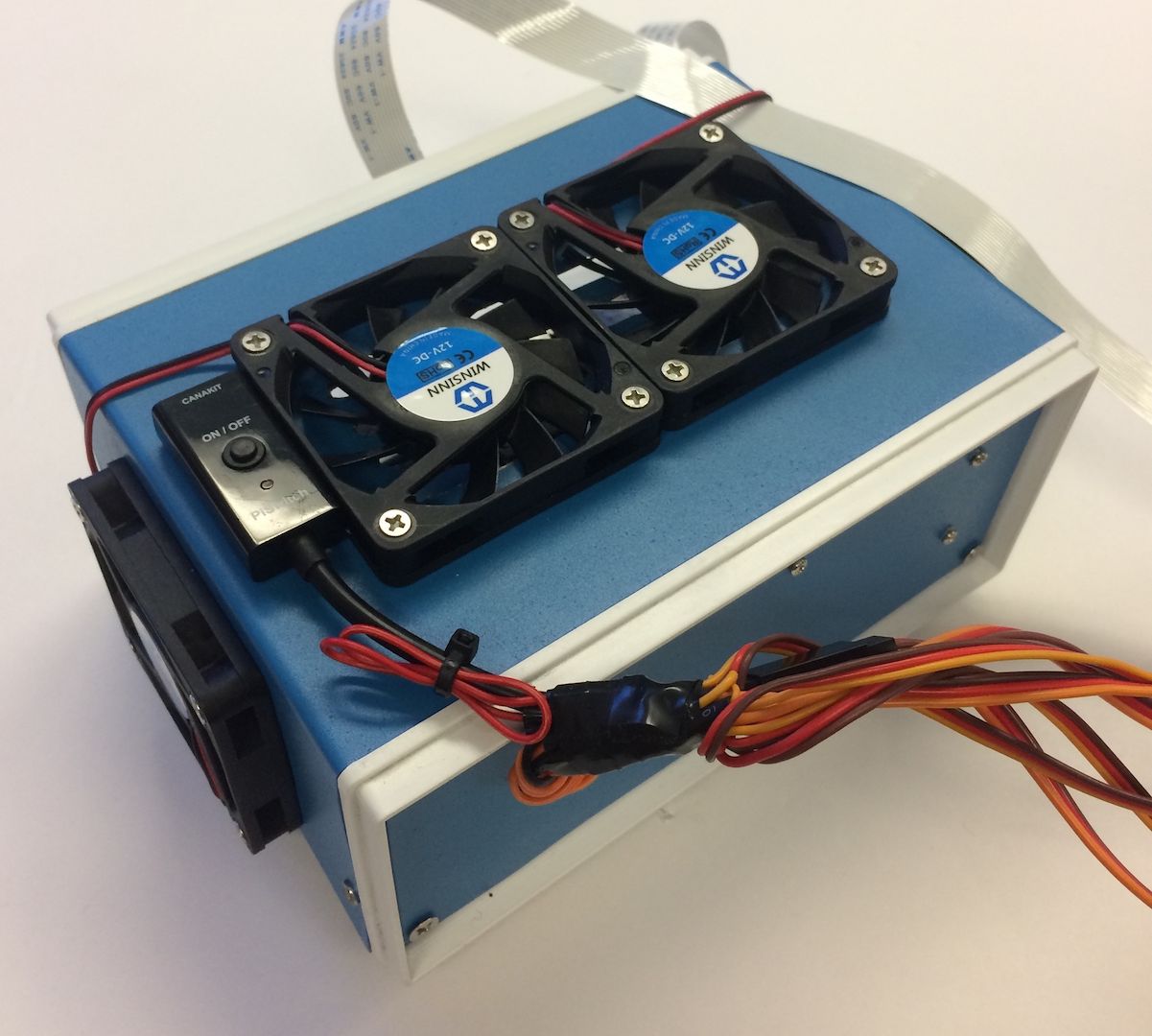

This step is completely optional but I like to put all my components into a nice little project box.

Here is how it looks:

You can find the project box on the materials list. I just drilled some holes and used brass standoffs to mount the electronics. I also mounted 4 cooling fans to keep a constant airflow through the RPI and TPU when hot.

Running

You are now ready to power on both the robotic arm and RPI! On the RPI, you can simply run the recycle_detection.py file. This will open a window and the robotic arm will start running just like in the demo video! Press the letter "q" on your keyboard to end the program.

Feel free to play around with the code and have fun!

Future Work

I hope to use ROS (Robot Operating System) to control the robotic arm with more precise movements, which should let it pick up objects more accurately.